Chameleon: Plug-and-Play Compositional Reasoning with Large Language Models

Pan Lu¹, Baolin Peng², Hao Cheng², Michel Galley², Kai-Wei Chang¹, Ying Nian Wu¹, Song-Chun Zhu¹, Jianfeng Gao²

¹University of California, Los Angeles

²Microsoft Research, Redmond

💥 Accepted to NeurIPS 2023.

💥 Best Weekly AI Paper by AlphaSignal (ranked 1st of 1,682 papers, top 0.06%).


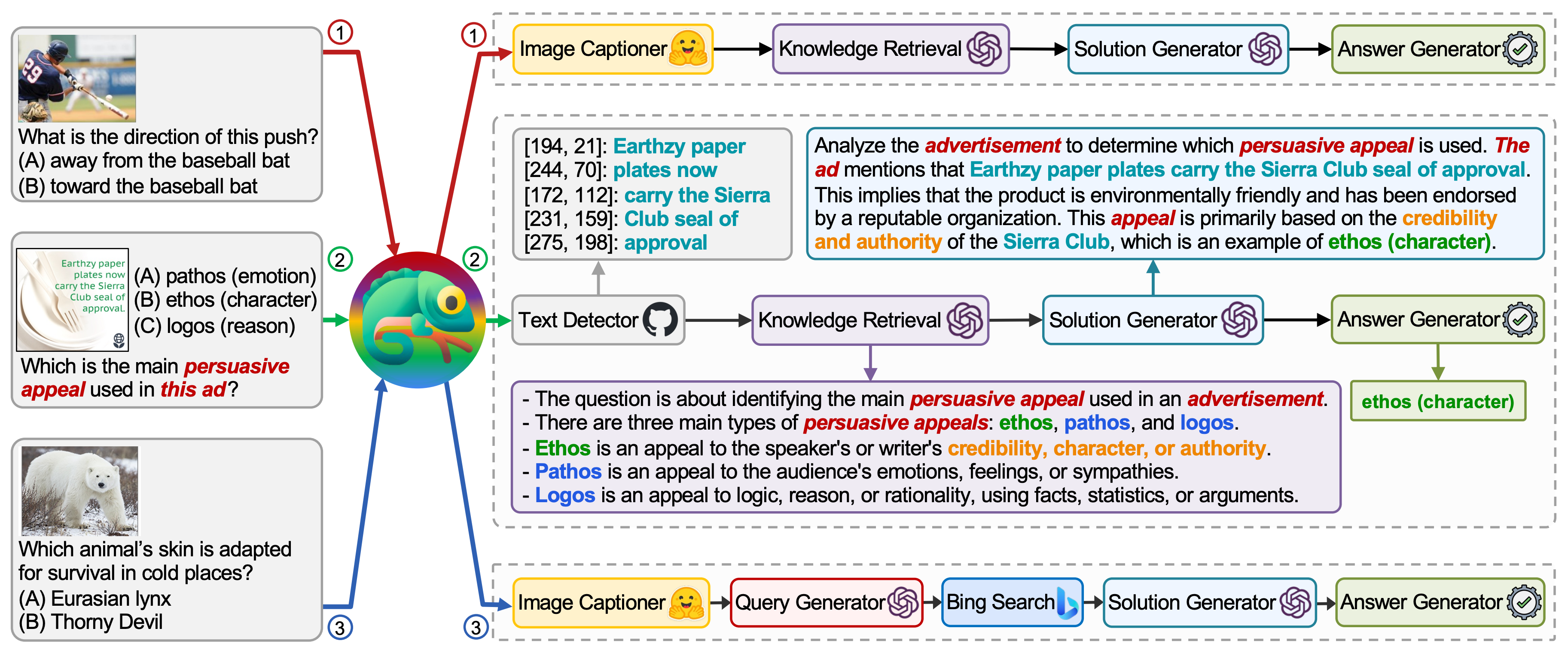

Examples from our Chameleon with GPT-4 on ScienceQA, a multi-modal question answering benchmark in scientific domains.

Chameleon adapts to different queries by synthesizing programs that compose various tools, then executing those tools sequentially to obtain the final answer.
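The plan-then-execute loop described above can be sketched as follows. This is a minimal illustration, not the released implementation: the planner is replaced by a fixed tool sequence (Chameleon uses a few-shot prompted LLM), the tools are hypothetical stubs, and the shared dictionary cache passed between tools is an assumption made for clarity.

```python
from typing import Callable, Dict, List

# Shared state passed from tool to tool (assumption: a plain dict).
Cache = Dict[str, object]
Tool = Callable[[Cache], Cache]


def knowledge_retrieval(cache: Cache) -> Cache:
    # Hypothetical stub: a real module would ask an LLM for background knowledge.
    cache["knowledge"] = "<retrieved background knowledge>"
    return cache


def solution_generator(cache: Cache) -> Cache:
    # Hypothetical stub: a real module would prompt an LLM with question + context.
    cache["solution"] = f"Given {cache.get('knowledge', '')}, the answer is (B)."
    return cache


def answer_generator(cache: Cache) -> Cache:
    # Extract the option letter that follows the last "(" in the solution text.
    solution = str(cache["solution"])
    cache["answer"] = solution[solution.rfind("(") + 1]
    return cache


# Tool inventory keyed by module name (names are illustrative).
TOOLS: Dict[str, Tool] = {
    "Knowledge_Retrieval": knowledge_retrieval,
    "Solution_Generator": solution_generator,
    "Answer_Generator": answer_generator,
}


def plan(question: str) -> List[str]:
    # Stand-in for the LLM planner: returns a fixed program instead of calling GPT-4.
    return ["Knowledge_Retrieval", "Solution_Generator", "Answer_Generator"]


def run(question: str) -> Cache:
    cache: Cache = {"question": question}
    program = plan(question)
    for name in program:  # execute the synthesized program one tool at a time
        cache = TOOLS[name](cache)
    cache["program"] = program
    return cache
```

Calling `run("Which option is correct?")` returns a cache whose `"answer"` entry is `"B"`. Because each tool only reads from and writes to the cache, adding a new tool amounts to registering another function in `TOOLS`, which is the plug-and-play property the page describes.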

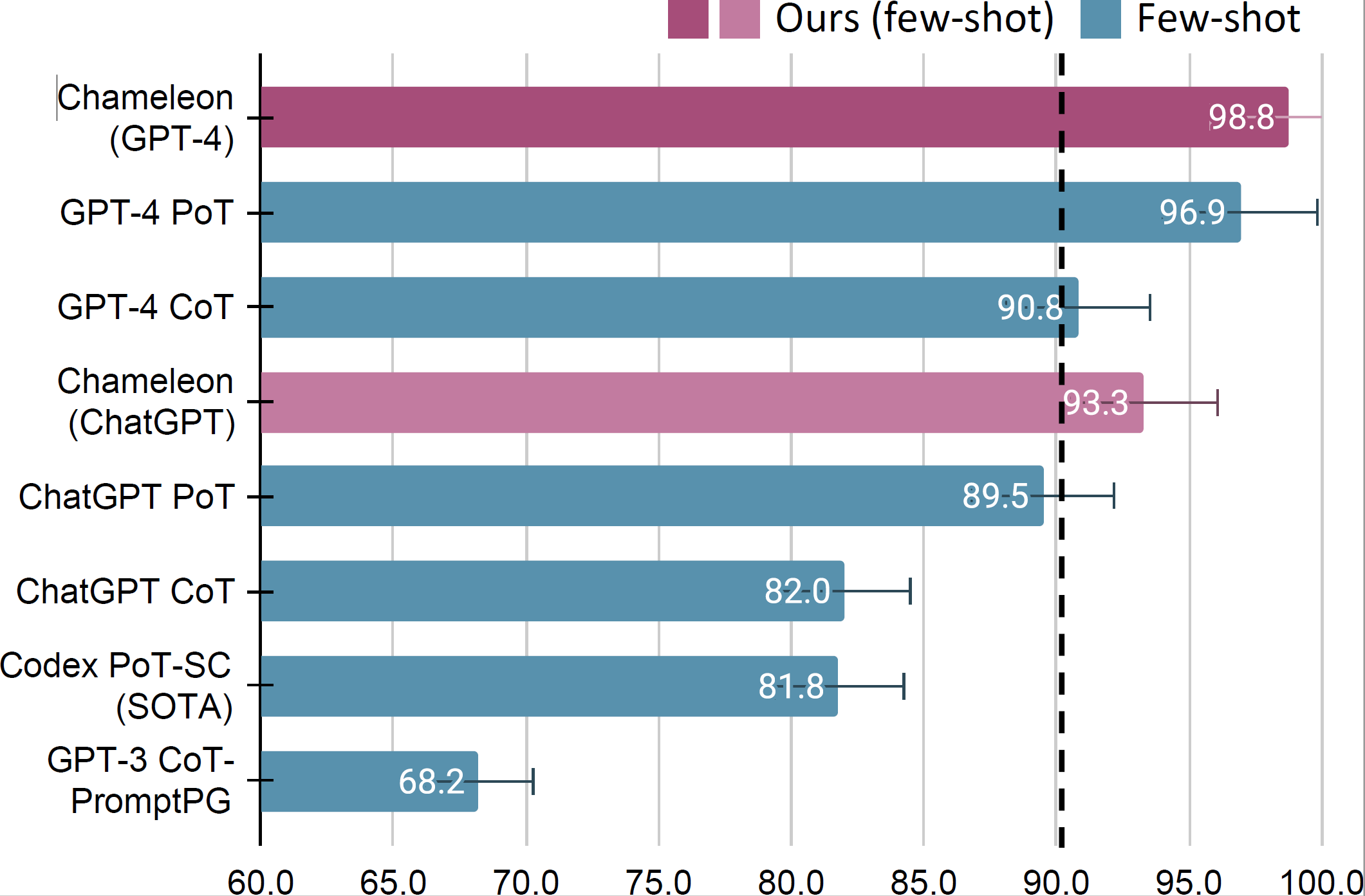

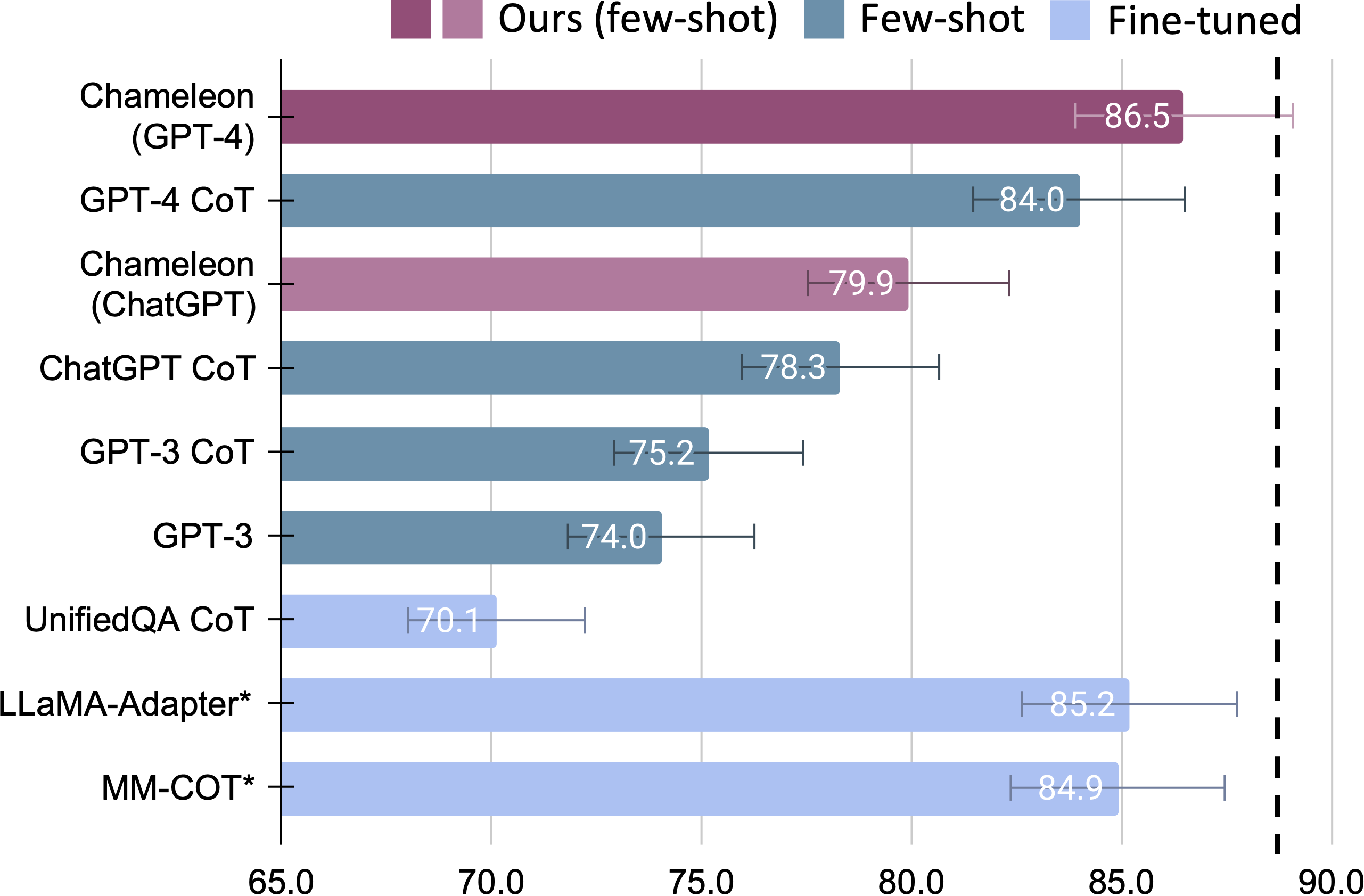

Chameleon significantly outperforms both fine-tuned models and few-shot prompted GPT-4/ChatGPT.

TabMWP

ScienceQA

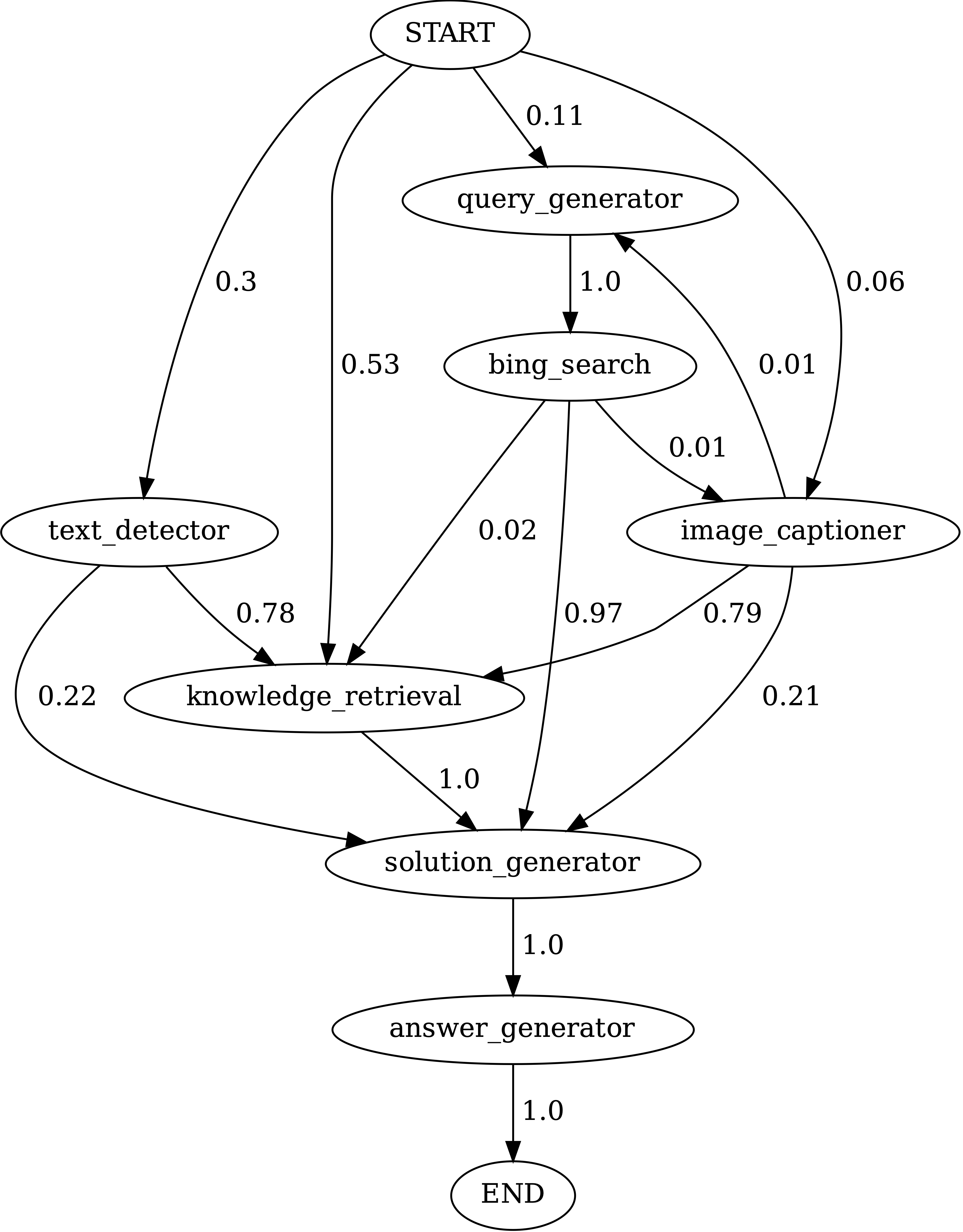

What plans does Chameleon synthesize?

- In a few-shot setup, the GPT-4 planner makes good decisions about how to sequence tools.

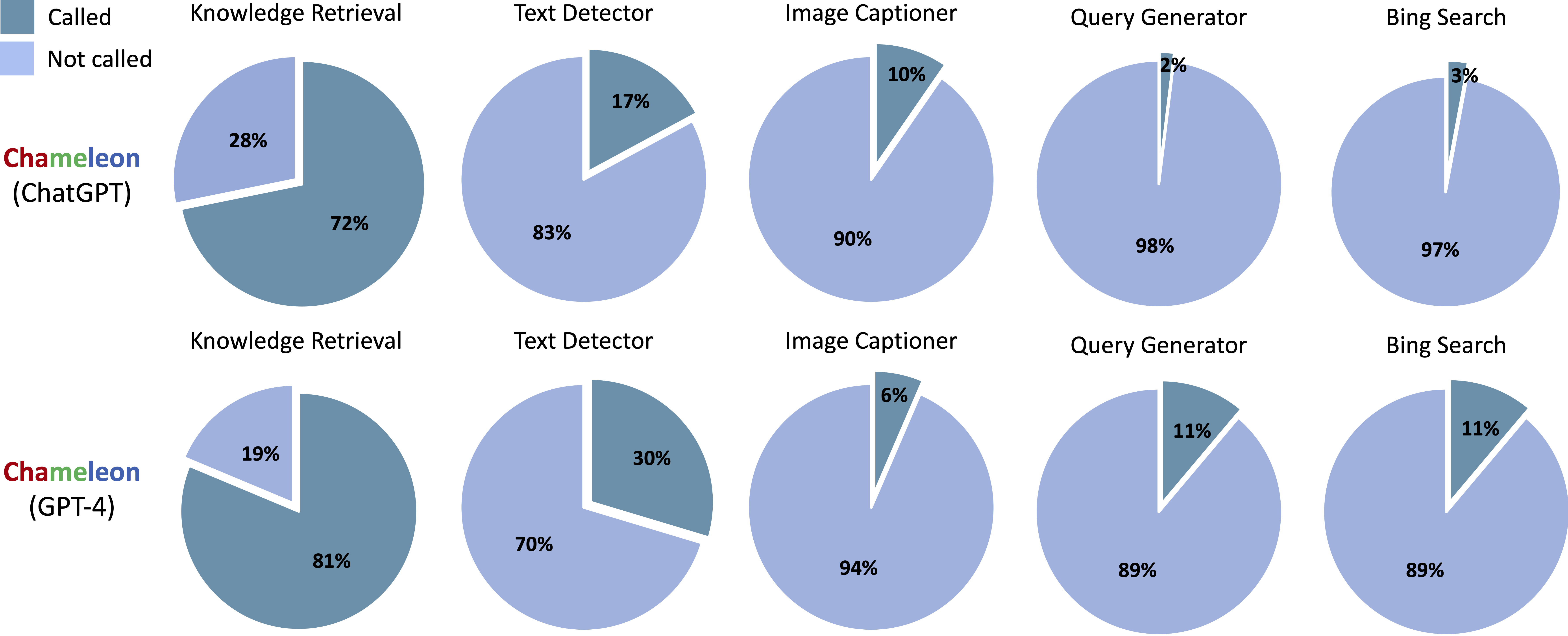

- For ScienceQA, GPT-4 often relies on either the knowledge retriever or Bing search, but rarely both.

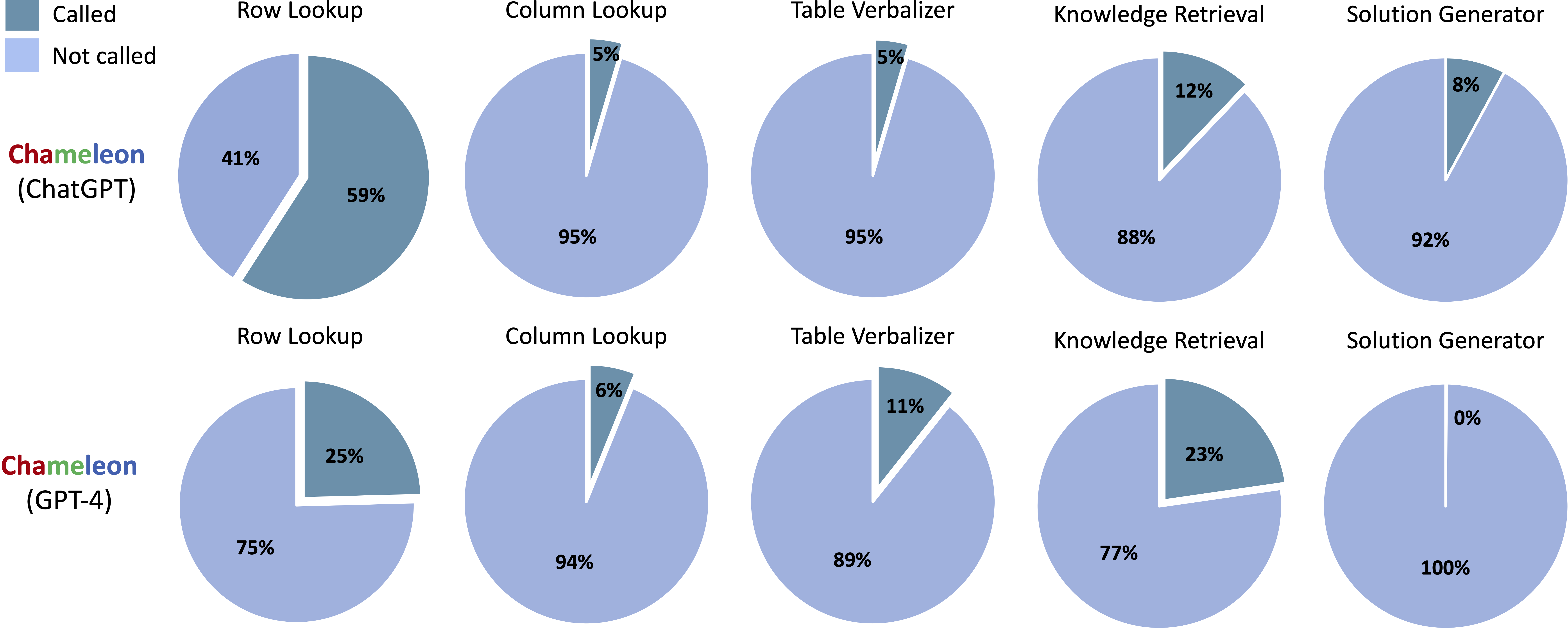

- On TabMWP, two main modes are observed: programs go either through the solution-executor module or through the program verifier and executor.

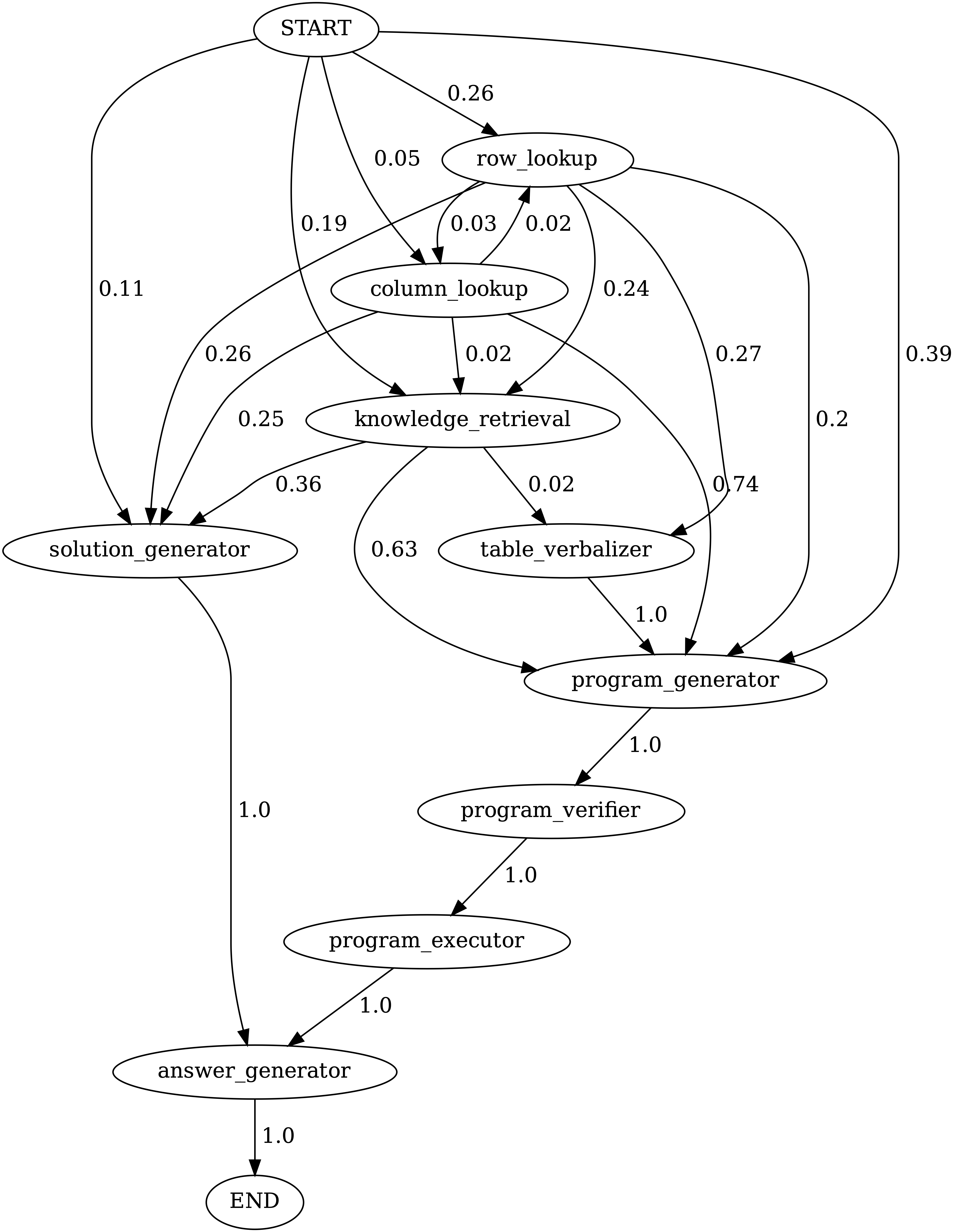

Transitions between modules in programs generated by Chameleon (GPT-4) on ScienceQA. START is the start symbol, END is a terminal symbol and the others are non-terminal symbols.

Transitions between modules in programs generated by Chameleon (GPT-4) on TabMWP. START is the start symbol, END is a terminal symbol and the others are non-terminal symbols.
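Transition statistics like those in the captions above can be computed by wrapping each generated program in START and END symbols and counting consecutive module pairs. The sketch below is an illustrative reconstruction of that kind of analysis, not the authors' analysis script; the module names and toy programs are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def module_transitions(programs: Iterable[List[str]]) -> Counter:
    """Count (from, to) module transitions, adding START/END around each program."""
    counts: Counter = Counter()
    for program in programs:
        symbols = ["START", *program, "END"]
        counts.update(zip(symbols, symbols[1:]))  # consecutive pairs
    return counts


def transition_probabilities(counts: Counter) -> Dict[Tuple[str, str], float]:
    """Normalize counts into P(next module | current module)."""
    totals: Counter = Counter()
    for (src, _dst), n in counts.items():
        totals[src] += n
    return {(src, dst): n / totals[src] for (src, dst), n in counts.items()}


# Hypothetical toy programs, for illustration only.
programs = [
    ["Knowledge_Retrieval", "Solution_Generator", "Answer_Generator"],
    ["Bing_Search", "Solution_Generator", "Answer_Generator"],
]
counts = module_transitions(programs)
probs = transition_probabilities(counts)
```

Here `counts[("Solution_Generator", "Answer_Generator")]` is 2 and `probs[("START", "Knowledge_Retrieval")]` is 0.5, since half of the toy programs begin with knowledge retrieval.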

Tools called in the programs generated by Chameleon (ChatGPT) and Chameleon (GPT-4) on ScienceQA.

Tools called in the programs generated by Chameleon (ChatGPT) and Chameleon (GPT-4) on TabMWP.
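A tool-usage breakdown like the one in these figures can be approximated by measuring the fraction of generated programs that call each tool at least once. This is a minimal sketch under that assumption about the metric; the module names and programs are hypothetical, not data from the paper.

```python
from collections import Counter
from typing import Dict, List


def tool_call_rates(programs: List[List[str]]) -> Dict[str, float]:
    """Fraction of programs that call each tool at least once."""
    # set(program) ensures a tool repeated within one program is counted once.
    calls = Counter(tool for program in programs for tool in set(program))
    return {tool: n / len(programs) for tool, n in calls.most_common()}


# Hypothetical toy programs, for illustration only.
programs = [
    ["Knowledge_Retrieval", "Solution_Generator", "Answer_Generator"],
    ["Bing_Search", "Solution_Generator", "Answer_Generator"],
    ["Program_Generator", "Program_Verifier", "Program_Executor", "Answer_Generator"],
    ["Solution_Generator", "Answer_Generator"],
]
rates = tool_call_rates(programs)
```

With these four programs, `Answer_Generator` has a rate of 1.0, `Solution_Generator` 0.75, and `Bing_Search` 0.25. Comparing such rates between two planners (for example, ChatGPT and GPT-4) shows which tools each one prefers.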

Sample Responses Comparison

Chameleon (GPT-4)

Chameleon (ChatGPT)

TBA

Our work is featured by WorldofAI.

Citation

If the paper inspires you and you use the data in your research, please cite us:

@inproceedings{lu2023chameleon,
  title={Chameleon: Plug-and-Play Compositional Reasoning with Large Language Models},
  author={Lu, Pan and Peng, Baolin and Cheng, Hao and Galley, Michel and Chang, Kai-Wei and Wu, Ying Nian and Zhu, Song-Chun and Gao, Jianfeng},
  booktitle={The 37th Conference on Neural Information Processing Systems (NeurIPS)},
  year={2023}
}